ReScript vs TypeScript: Building a Concurrent Queue library

We'll write a mission-critical piece of code in both TypeScript and ReScript and compare the process and results, and find out what TypeScript still holds over ReScript!

In this issue, we're going to implement a small library in both TypeScript and ReScript, a TypeScript alternative. The goal is to give you some evidence of whether your next mission-critical module should be written in TypeScript, or if you should start looking into ReScript.

By mission-critical I mean a piece of code that needs to be right because the cost of it being wrong is just too high. You can imagine this being a different piece of an app, depending on the app. For example, on an e-commerce app, this would likely be the payment processing code or the checkout code. In this case, we need to build a queue to do a bunch of work.

The comparison will be done considering:

- the Developer Experience – or how painful/glorious it was for me to write things out and test them out.

- the Artefact – or how hard it is to make sense of the generated code

- and the Interop – or how easy it is to use from JS

In both cases, we will limit ourselves to as idiomatic and straightforward code as possible. So we'll refrain from complex type-level computation in TypeScript and esoteric types or functional programming constructs in ReScript.

You know, keep it practical. I've actually had to write this queue for work before.

The Challenge: A Concurrent Queue

We will build a Concurrent Queue to execute a number of tasks with controllable concurrency. This is useful for situations where we can upfront generate a lot of work, but we want to control how fast we go through it and keep track of what has succeeded and failed.

Try running fetch in a loop and let me know what you find.

This queue behaves as follows:

- When created, it requires the tasks and a maximum number of tasks to run concurrently:

const queue = new ConcurrentQueue([

async () => console.log("I'm task 1!"),

async () => console.log("I'm task 2!"),

async () => console.log("I'm task 3!"),

], {

// execute at most 2 tasks at a time

maxConcurrency: 2

})

- At any point in time, we can await the result of all tasks.

let results = await queue.run()

console.log("Results: ", results)

A Concurrent Queue in TypeScript

TypeScript isn't my day-to-day language, so I enlisted the help of a few friends who do write TypeScript for a living. They helped me review and refactor this code until it was idiomatic for their codebases. Or at least until they felt they'd approve it in a PR at work.

Since my day-to-day involves a lot of impure functional programming, I started off with a functional-style program that recurses and relies on mutation. One of the suggestions I got was to try a class-based approach that would fit better how they think of the language. The other suggestion was to try to do this without recursion and just use a for loop. So I did those 3 and here they are.

The Code: Functional Style

My first attempt looked a little bit like this.

const doWork = async (tasks, results) => {

let nextTask = tasks.shift();

if (nextTask === undefined) return "done";

let result = await nextTask();

results.push(result);

return doWork(tasks, results);

};

const queue = async (tasks, opts = { maxConcurrency: 1 }) => {

let results = [];

const workers = new Array(opts.maxConcurrency)

.fill(0)

.map((_) => doWork(tasks, results));

await Promise.all(workers);

return results;

};

export default queue;

We start with an array of tasks and immediately build two things: an array to save the results, and an array of workers that'll run the actual tasks. Then we await all the workers, and once they are finished, we return the results.

There are more functional ways of doing this, for example by making the workers carry around an accumulator where they put the results instead of mutating the results array.
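As a rough sketch of that accumulator idea (not the code I wrote for work), each worker can return the results it collected, and we merge them at the end. The names doWorkAcc and queueAcc are made up for this example. The task list is still consumed with shift, and the results come out grouped by worker rather than in task order.

```typescript
type Task<R> = () => Promise<R>;

// Each worker carries its own accumulator instead of pushing into a
// shared results array.
async function doWorkAcc<R>(tasks: Task<R>[], acc: R[] = []): Promise<R[]> {
  const nextTask = tasks.shift();
  if (nextTask === undefined) return acc;
  const result = await nextTask();
  return doWorkAcc(tasks, [...acc, result]);
}

async function queueAcc<R>(
  tasks: Task<R>[],
  opts: { maxConcurrency: number } = { maxConcurrency: 1 }
): Promise<R[]> {
  // Copy the list so the caller's array is left untouched.
  const pending = [...tasks];
  const workers = Array.from({ length: opts.maxConcurrency }, () =>
    doWorkAcc(pending)
  );
  const perWorker = await Promise.all(workers);
  // Merge the per-worker accumulators: grouped by worker, not by task order.
  return ([] as R[]).concat(...perWorker);
}
```

The trade-off is a bit of copying on every step in exchange for workers that never touch shared results.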

Every worker in the workers array is just a promise that keeps promising. So the first thing a worker does is try to get a task (tasks.shift()). If there's no task, then we're done! If we do find a task, we call it and wait for it to finish. Then we push the result into our results array, and repeat by recursing.

It took some of my reviewers a minute to go through this because they associated recursion with immutability, but after that hop, everyone could understand what was happening.

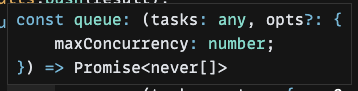

However, this naive attempt had a few usage issues. The first time I tried to use it, I passed whatever value and the type-checker was happy with it. Inspecting the inferred types I found that the public function looks like this.

Digging in a little further, not even the internal function had the correct types!

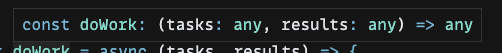

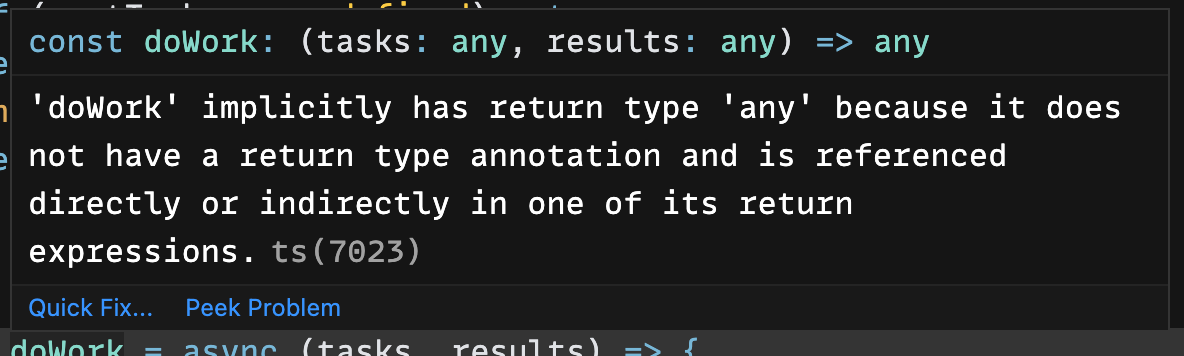

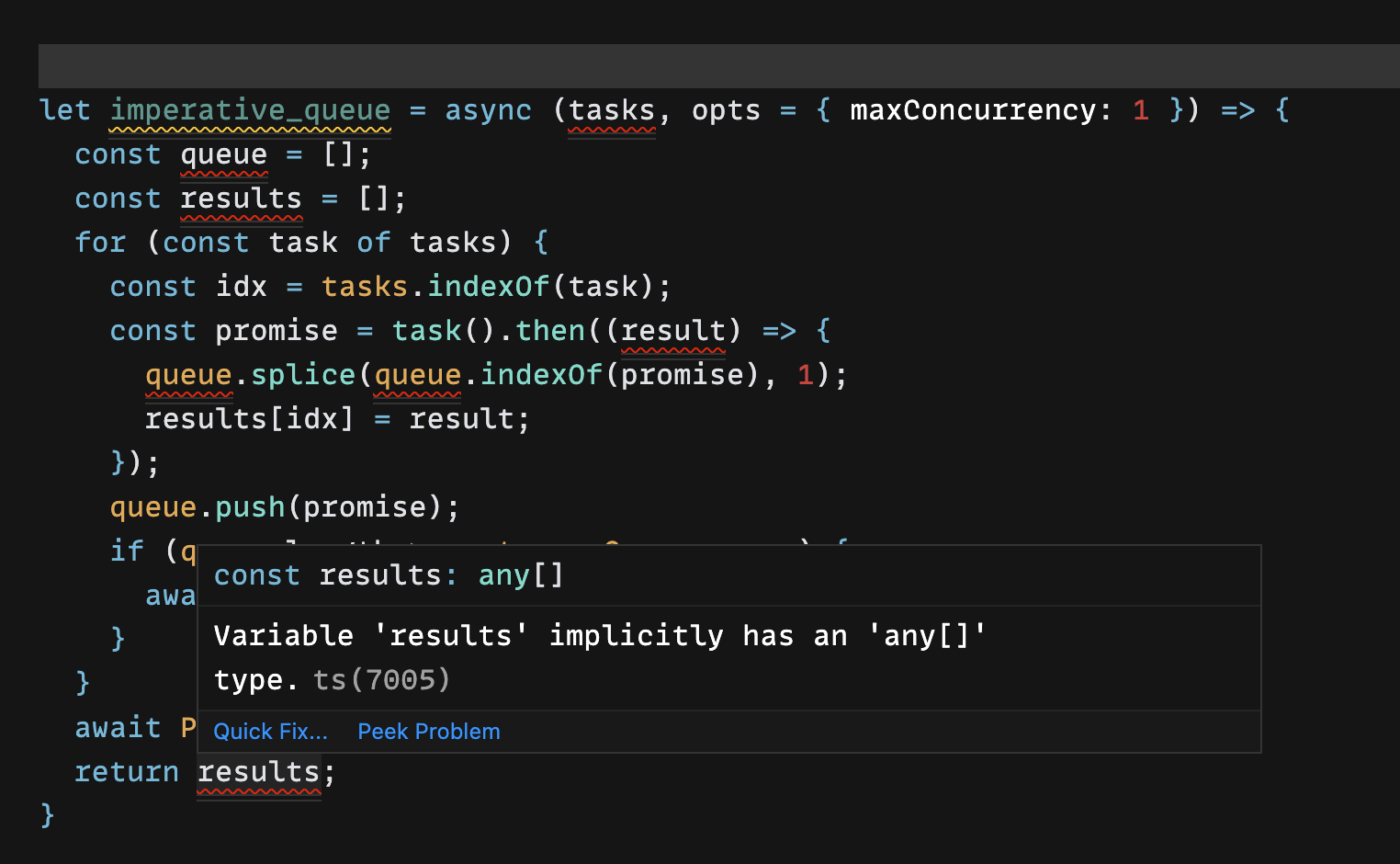

So naturally, I double-checked the settings and I found that I needed to use the noImplicitAny setting. And once I turned that on, I encountered this.

The type inference felt just so useless that I decided to do the typing in my head instead. I annotated both functions, but to do so I needed to go from arrow function (x => x) syntax to full function syntax (function x() { return x }). It is a small thing, but yes, it gets in the way of me just typing something and getting good feedback from the compiler. Here's the code I came up with.

type Task<R> = () => Promise<R>;

interface QueueOpts {

maxConcurrency: number;

}

async function doWork<R>(tasks: Task<R>[], results: R[]): Promise<void> {

let nextTask = tasks.shift();

if (nextTask === undefined) return;

let result = await nextTask();

results.push(result);

return doWork(tasks, results);

}

async function queue<R>(

tasks: Task<R>[],

opts: QueueOpts = { maxConcurrency: 1 }

): Promise<R[]> {

let results: R[] = [];

const workers = new Array(opts.maxConcurrency)

.fill(0)

.map((_) => doWork(tasks, results));

await Promise.all(workers);

return results;

}

export default queue;

The Code: Imperative Style

The second style I tried was to replace the recursion with a for loop. I ended up using a for-of loop to iterate over the tasks. Here's my first attempt.

let imperative_queue = async (tasks, opts = { maxConcurrency: 1 }) => {

const queue = [];

const results = [];

for (const task of tasks) {

const idx = tasks.indexOf(task);

const promise = task().then((result) => {

queue.splice(queue.indexOf(promise), 1);

results[idx] = result;

});

queue.push(promise);

if (queue.length >= opts.maxConcurrency) {

await Promise.race(queue);

}

}

await Promise.all(queue);

return results;

}

We managed to do something that I thought was clever: we keep a little array of ongoing promises, and each one removes itself from this array as soon as it is done. This means that we can use that array to check if we've hit the concurrency limit and, in case we have, wait for a slot to open before we continue and process a new task.

This approach had one subtle bug that was hard to find: originally I had forgotten to remove the promise from the queue once it resolved. This seemed to work just fine because my tests were too small. Promise.race(queue) was still being called, but with an already-resolved promise left in the queue it always returned immediately, so progress was always being made and the concurrency limit was silently ignored. Had I had a larger test suite or more intense tasks, it would've been more obvious what was going on.
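To make that bug visible, here's a small sketch that counts how many tasks are in flight at once, with and without the removal step. Everything in it (peakInFlight, sleep, makeTasks, and the removeWhenDone flag) is made up for this example. It mirrors the loop above but is not the library code itself.

```typescript
type Task<T> = () => Promise<T>;

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs the tasks with the same loop as above and returns the peak number
// of tasks that were running at the same time.
async function peakInFlight(
  tasks: Task<void>[],
  maxConcurrency: number,
  removeWhenDone: boolean
): Promise<number> {
  const queue: Promise<void>[] = [];
  let inFlight = 0;
  let peak = 0;

  for (const task of tasks) {
    inFlight += 1;
    peak = Math.max(peak, inFlight);
    const promise: Promise<void> = task().then(() => {
      inFlight -= 1;
      // This is the step I had originally forgotten.
      if (removeWhenDone) queue.splice(queue.indexOf(promise), 1);
    });
    queue.push(promise);
    if (queue.length >= maxConcurrency) {
      // If a settled promise is still in `queue`, this resolves right away.
      await Promise.race(queue);
    }
  }

  await Promise.all(queue);
  return peak;
}

// One quick task followed by slower ones is enough to trigger the problem.
const makeTasks = (): Task<void>[] =>
  [5, 50, 50, 50, 50, 50].map((ms) => () => sleep(ms));
```

Calling peakInFlight(makeTasks(), 2, false) lets the peak climb past the limit of 2 as soon as the first quick task settles, while passing true keeps it at the limit.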

Sadly, the inference here was not any better. Essentially everything failed to type correctly and was set to any. You can see all the red squiggly lines in the image below, where the inference said "I guess that's an any". Bummer, time to annotate again. And yes, this also means rewriting from arrow function syntax to full function syntax!

There we go.

export async function imperative_queue<T>(

tasks: Task<T>[],

opts: QueueOpts = { maxConcurrency: 1 }

): Promise<T[]> {

const queue: Promise<void>[] = [];

const results: T[] = new Array(tasks.length);

for (const task of tasks) {

const idx = tasks.indexOf(task);

const promise = task().then((result) => {

queue.splice(queue.indexOf(promise), 1);

results[idx] = result;

});

queue.push(promise);

if (queue.length >= opts.maxConcurrency) {

await Promise.race(queue);

}

}

await Promise.all(queue);

return results;

}

The Code: OOP Style

Then I overcorrected and went full OOP on this, just to understand whether the experience would be any better in any way. My initial attempt is here below and reuses the first functional approach internally.

interface QueueOpts {

maxConcurrency: number;

}

type Task<R> = () => Promise<R>;

class ConcurrentQueue<R> {

opts: QueueOpts = {

maxConcurrency: 1

};

tasks: Task<R>[] = [];

results: R[] = [];

constructor(opts: QueueOpts) {

this.opts = opts;

}

public async run(tasks: Task<R>[]): Promise<R[]> {

this.tasks = tasks;

const workers: Promise<void>[] = new Array(this.opts.maxConcurrency)

.fill(0)

.map((_) => this.doWork());

await Promise.all(workers);

return this.results;

}

async doWork(): Promise<void> {

let nextTask = this.tasks.shift();

if (nextTask === undefined) return;

let result = await nextTask();

this.results.push(result);

return this.doWork();

}

}

Turns out that the inference wasn't any better, and I had to annotate everything anyway.

The Developer Experience

After writing and trying out these 3 solutions, I had a rough idea of what the developer experience would be like for code of this kind.

The inference was very problematic. Being forced to annotate things means that every time I change some of the functions, I have to make sure to update the type annotations. If the changes were on the input or output types, I'd have to update every other annotation that depended on these ones. This will just add more work for me as a programmer, and as an extremely lazy one, I take this extremely seriously.

I think what really made things better here was that out-of-the-box the autocompletion for most things was excellent. This made it possible for me to keep writing code even when the types were off. Being able to write things I know are wrong and fix them later on is actually very nice.

Lastly, first-class support for async/await is not something to take lightly. It made the functions incredibly clean, and I have no idea how I'd have written the for-of without it!

The Artefact

There's actually not a lot to be said here. When I compiled to ES6, the output was the same code, without the type annotations. So the artefact is only as good or bad as the code you write.

When calling from TypeScript, assuming that the calling code has the same strictness checks, this looks like it'd be safe. I tried passing in the wrong values and the compiler did complain accordingly.

The Interop

Because the artifact is exactly the same as just vanilla ES6 JavaScript, we can see that the Interop here would be straightforward. Exact same API.

A Concurrent Queue in ReScript

The Code

I set out to write this as close as possible to the original one I wrote for work, but then I decided to port the TypeScript version instead. The main reason is that the original used MutableQueue, one of the useful utilities in Belt, the small standard library that ReScript comes with. Using that here would be cheating a little 😉, so I went back to specifying the tasks as just an Array.

Here's the code. It has only one type definition, for the options object that specifies the maximum concurrency. Everything else was inferred correctly the first time.

// file: ConcurrentQueue.res

type opts = {maxConcurrency: int}

let run = (~opts={maxConcurrency: 1}, t) => {

let results = []

let rec doWork = () => {

switch Js.Array.shift(t) {

| None => Js.Promise.resolve()

| Some(fn) =>

fn(.) |> Js.Promise.then_(result => {

results->Js.Array2.push(result)->ignore

doWork()

})

}

}

let workers = Belt.Array.make(opts.maxConcurrency, ())->Js.Array2.map(doWork)

Js.Promise.all(workers) |> Js.Promise.then_(_ => Js.Promise.resolve(results))

}

There are 2 things that pop up immediately when looking at this code.

First, the type system does not allow the broken behavior of the .then call for Promises in JavaScript, so we need to call the resolve function explicitly.

Second, once we get our hands on a task, we call it like fn(.). This notation says that this is a function that is applied to all of its arguments. Normally this isn't required, and we'd call fn(), but in this case we're writing something explicitly to be consumed from the JavaScript side, so this makes sure we leave out all the infrastructure for currying functions. We'll talk more about this when we examine the generated code.

About that broken behavior: in JavaScript, .then is really two functions in one, depending on what the callback returns.

1. It is a map function that just changes the type of the value inside the promise. If you think of a Promise as an Array that is empty until the value is resolved, and then it has a single element, then .then in some way lets you do the same thing as array.map(f)

2. It is a flatMap function that merges the wrapping Promise around a value. To me, it's always been more intuitive to think of this as a flatten where if you do array.map(x => [x]).flatten() then the extra Array around every value goes away.

Anyway, this sort of behavior is not possible to type correctly in most type-systems. Not in OCaml, not in Haskell, not in Scala, not in Rust, and also not in ReScript.
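Seen from JavaScript or TypeScript, the two behaviors of .then listed above look like this. It's a minimal sketch and the variable names are mine.

```typescript
// `.then` used like map: the callback returns a plain value.
const mapped: Promise<number> = Promise.resolve(1).then((x) => x + 1);

// `.then` used like flatMap: the callback returns a Promise, and the extra
// layer is flattened away instead of giving us a Promise of a Promise.
const flatMapped: Promise<number> = Promise.resolve(1).then((x) =>
  Promise.resolve(x + 1)
);

// Both promises resolve to the number 2.
```

The same method call does two different jobs depending on what the callback returns, which is why the ReScript version has to spell out Js.Promise.resolve.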

Usage here is pretty much the same as the TypeScript version. We define our tasks, and I've ported over the same log function we used to delay the logging of a string. Then we call our function to run the tasks and pass in an object that defines the concurrency.
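The log helper itself isn't shown in this post. On the TypeScript side, a helper with that shape might look roughly like the sketch below. The signature is my assumption, based on how it's called with a delay in milliseconds and a message.

```typescript
// Hypothetical helper: waits `delayMs` milliseconds, logs `message`,
// and resolves with it so the queue has a result to collect.
function log(delayMs: number, message: string): Promise<string> {
  return new Promise((resolve) => {
    setTimeout(() => {
      console.log(message);
      resolve(message);
    }, delayMs);
  });
}
```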

The thing that's sticking out like a sore thumb is all of the Promise stuff, and the (.) => in the task functions. This is ReScript's way of saying "this is a function that should not be curried".

let tasks = [

(.) => log(5000, "I'm (even later) task #1!"),

(.) => log(1000, "I'm (late) task #2!"),

(.) => log(300, "I'm task #3!"),

(.) => log(400, "I'm task #4!"),

(.) => log(200, "I'm task #5!"),

(.) => log(500, "I'm task #6!"),

(.) => log(50, "I'm task #7!"),

(.) => log(1, "I'm task #8!"),

]

let _ = ConcurrentQueue.run(

tasks,

~opts={

maxConcurrency: 2,

},

) |> Js.Promise.then_(results => Js.log(results)->Js.Promise.resolve)

The Developer Experience

I'm strongly biased towards ReScript here, since I'm writing not just this blog but also a book, and I've got over a handful of years writing OCaml as well. So with this disclaimer in mind, here's what I found easy and what I found hard.

Type inference is just amazing. I didn't have to annotate anything, it just figured out what I was trying to do and helped make sure I didn't contradict my prior decisions. The type of a task was inferred correctly to be unit => Js.Promise.t<'a>, and everything in the tasks array has the same 'a, so all tasks return the same kind of value.

The stuff that did not work well was actually pretty painful.

Lack of async/await was the first thing I picked up. After writing a little TypeScript, this is the thing that's most obviously needed in ReScript. It would make so much of the code above look and feel like the TypeScript you'd write. For example, imagine we could rewrite this.

// before async/await

fn(.) |> Js.Promise.then_(result => {

results->Js.Array2.push(result)->ignore

doWork()

})

// with async/await

let result = await fn(.)

results->Js.Array2.push(result)->ignore

doWork()

The next thing is how many ways the standard library has of doing the same thing. Right now you can pick from Js.Array, Js.Array2, and Belt.Array. The core difference is that Js.Array asks for the array as the last argument, whereas the other two ask for the array as the first argument.

This may sound trivial, but it has implications on how you design APIs to prefer pipe-first or pipe-last, and to favor point-free programming or better inference.
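The same trade-off can be sketched in TypeScript. This is only an analogy with two made-up helpers (mapLast and mapFirst), not the ReScript standard library: the data-last version composes nicely without naming the array, while the data-first version gives the compiler the array up front so it can infer the callback's types.

```typescript
// Data-last (pipe-last style): the function comes first, the array last.
const mapLast =
  <A, B>(f: (a: A) => B) =>
  (xs: A[]): B[] =>
    xs.map(f);

// Data-first (pipe-first style): the array comes first.
const mapFirst = <A, B>(xs: A[], f: (a: A) => B): B[] => xs.map(f);

// Point-free: we never mention the array, but `n` needs an annotation
// because there is no array yet to infer it from.
const double = mapLast((n: number) => n * 2);

// Better inference: `n` is inferred as number from the array.
const doubled = mapFirst([1, 2, 3], (n) => n * 2);
```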

If you'd like to learn more about this, don't miss out on a copy of Practical ReScript where I'll help you build a good intuition of how the type system works, and how to make it work for you.

The last thing that pops up is that sometimes we are using -> and sometimes we are using |>. This of course is related to the last point, but it also is an indication that you have to remember what style of programming is preferred by the library. Sometimes this means a library just stands out unnecessarily. In this case, it's the Js.Promise API.

The Artefact

When we look at the generated JavaScript, it is always a little scary. In this case, it doesn't actually look that much different than the TypeScript we wrote by hand before.

// Generated by ReScript, PLEASE EDIT WITH CARE

import * as Belt_Array from "rescript/lib/es6/belt_Array.js";

function run(optsOpt, t) {

var opts = optsOpt !== undefined ? optsOpt : ({

maxConcurrency: 1

});

var results = [];

var doWork = function (param) {

var fn = t.shift();

if (fn !== undefined) {

return fn().then(function (result) {

results.push(result);

return doWork(undefined);

});

} else {

return Promise.resolve(undefined);

}

};

var workers = Belt_Array.make(opts.maxConcurrency, undefined).map(doWork);

return Promise.all(workers).then(function (param) {

return Promise.resolve(results);

});

}

export {

run ,

}

/* No side effect */

The first strange thing is that there are a few undefined values sprinkled throughout the code. These are ReScript's way of encoding the unit value and they're mostly harmless.

The second strange thing in here is that we are calling one of ReScript's own libraries: Belt_Array.make.

Belt_Array.make creates an array of a given length, filled with a given value. In this case, our code used the unit value, so we created an array like [(), (), (), ...] just so we could then call our doWork function once per element.

Another point to bring up is the fn(.) that we spoke of before. Thanks to that little . that tells the type system this is a function that should not be curried, we can just call it without any other checks. If we refactor our queue code and remove the ., the generated code will look a little bit more like this.

if (fn !== undefined) {

return Curry._1(fn, undefined).then(function (result) {

results.push(result);

return doWork(undefined);

});

}

Notice how we're passing the fn function to a special function in the Curry module. This function knows how to deal with automated currying.
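To get a feel for what "dealing with automated currying" means at runtime, here's a heavily simplified TypeScript sketch. The applyOne helper is hypothetical and is not the code from ReScript's Curry module. It only illustrates the general idea of checking how many parameters a function declares before deciding whether to call it or wait for more arguments.

```typescript
// Hypothetical, simplified stand-in. NOT the real Curry module.
type AnyFn = (...args: any[]) => any;

function applyOne(fn: AnyFn, arg: unknown): any {
  // The function declares at most one parameter: just call it.
  if (fn.length <= 1) return fn(arg);
  // It declares more: hand back a function that waits for the rest.
  return (...rest: unknown[]) => fn(arg, ...rest);
}

const task = () => Promise.resolve("done");
const add = (a: number, b: number) => a + b;

const fromTask = applyOne(task, undefined); // called directly, like task()
const addOne = applyOne(add, 1); // a function still waiting for `b`
```

That extra check on every call is the infrastructure the little . lets us skip.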

Now that we understand these things and see they are harmless implementation details, we can see that the generated code is going to be roughly equivalent to the code we have manually written.

This is one of the key selling points of ReScript.

The Interop

As we can see from the generated code, the interop from the JS side will be pretty close to what's expected of TypeScript. There's a single function that receives an array of functions that return promises, and a configuration option to set how many promises to run at once.

If we wanted to run this code from some other ReScript, there's no need to worry about the compiler settings, since the type system is not configurable like in TypeScript.

When trying to consume this library from TypeScript, we need to make one tiny change to our code to generate the appropriate TypeScript definitions: just add the @genType annotation to the functions we want to expose. Then we get a file like this, which defines our opts type and our run function type so you can call it correctly.

/* TypeScript file generated from ConcurrentQueue.res by genType. */

/* eslint-disable import/first */

// @ts-ignore: Implicit any on import

import * as Curry__Es6Import from 'rescript/lib/es6/curry.js';

const Curry: any = Curry__Es6Import;

// @ts-ignore: Implicit any on import

import * as ConcurrentQueueBS__Es6Import from './ConcurrentQueue.bs';

const ConcurrentQueueBS: any = ConcurrentQueueBS__Es6Import;

// tslint:disable-next-line:interface-over-type-literal

export type opts = { readonly maxConcurrency: number };

export const run: <T1>(

_1:{ readonly opts?: opts },

_2:Array<(() => Promise<T1>)>

) => Promise<T1[]> = function <T1>(Arg1: any, Arg2: any) {

const result = Curry._2(ConcurrentQueueBS.run, Arg1.opts, Arg2);

return result

};

Not as straightforward, but at least we are getting the right types on the TypeScript side!


Conclusion

All in all, writing TypeScript still felt faster than writing ReScript. I knew that some of the code I was writing was completely unsafe, so that made me very uneasy. Perhaps for the bulk of some applications, this is fine, since frameworks like React can take the hit of failing quickly and helping me fix these bugs. But for framework-agnostic code that implements significant functionality...I don't know if I'd rather feel faster than know I'm safe.

So my recommendations to myself after doing this exercise are here.

- If you're writing mission-critical code that must be correct, and you want to make sure you have zero type errors, I think ReScript is the right choice. There is a cost to writing this (as we saw above), but it gives you enough flexibility to produce the exact JavaScript and TypeScript you need while sacrificing zero of the type safety.

The exception here is if your code is Promise-heavy, then I'd really consider staying with TypeScript for now, at least until ReScript gets proper async/await support. The reason is that the more complex work you need to do with Promises, the more you'll feel the burden of -> vs |>, the limitations of the Promise library shipped with the standard library, etc. Right now it really feels like TypeScript is winning there.

- For general application code that isn't mission-critical, TypeScript is most likely the better alternative right now since you will avoid a lot of the cost of writing (as we saw above). I'd still like to run another experiment like this to see what are the obvious short-term and long-term trade-offs that we make by choosing one over the other.

The experience is just so much better when it comes to maintaining complex types (hint: the compiler does most of the work) and ensuring that things are as correct as they can be.

I do get the point that it will be harder to find contributors, and ultimately building open source software is a social activity. So we need people.

The question is, would ironing these wrinkles help here? If we had first-class support for async/await, and a single blessed way in the standard library, would this help adoption in the lower, core tiers of web infrastructure?

Now it's your turn to try and build something between TypeScript and ReScript and do some comparisons of your experience, and don't forget to tell me how it went on Twitter! Just @ me: @leostera

See you on the next issue of Practical ReScript 👋🏽

Acknowledgments

Thanks to @p1xelHer0, @Alecaceresprado, and everyone else who helped me review the TypeScript in this post.